Technology should be amoral. Morality is difficult stuff that should be left to humans to deal with. At best, technology can help inform humans to make moral choices, but to argue, as both sides in this debate seem to be doing, that technology can be moral in itself is to take a dangerous step.

We have millennia of literature arguing against devolving moral choice to simple mechanistic reasoning, from the Solomonic compromise, through the cautionary tales of golems, to modern myths like Brazil.

Indeed, let's look at contemporary cases where we are asked to devolve moral judgements to machines. David Weinberger's essay "Copy Protection is a Crime" sets out this category error clearly, arguing that DRM eliminates leeway by handing control to stupid mechanisms:

> In reality, our legal system usually leaves us wiggle room. What's fair in one case won't be in another - and only human judgment can discern the difference. As we write the rules of use into software and hardware, we are also rewriting the rules we live by as a society, without anyone first bothering to ask if that's OK.

David Berlind and Walt Mossberg have picked up on this too, realising that code is not good at subtlety and judgement.

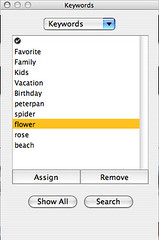

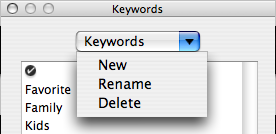

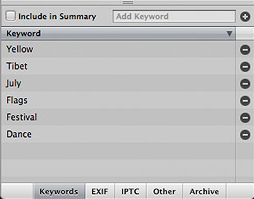

Similarly, the Censorware Project and the OpenNet Initiative document the shortcomings of using computers to decide, through crude keyword filtering, whose ideas can be seen online.
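To make the bluntness of keyword filtering concrete, here is a minimal sketch of a substring-based filter. The blocklist, the function name `is_blocked`, and the sample page titles are all hypothetical, invented for illustration; this is not how any particular filtering product works, only the simplest version of the idea.

```python
# Hypothetical blocklist, made up for this illustration.
BLOCKED_KEYWORDS = {"breast", "sex"}


def is_blocked(text: str) -> bool:
    """Return True if any blocked keyword appears anywhere in the text."""
    lowered = text.lower()
    # Plain substring matching: no notion of context, intent, or word boundaries.
    return any(keyword in lowered for keyword in BLOCKED_KEYWORDS)


# Hypothetical page titles a reader might want to see.
pages = [
    "Breast cancer screening: what to expect",
    "Local history of Essex and Sussex",
    "Weather forecast for the weekend",
]

for page in pages:
    print(is_blocked(page), page)
```

The first two titles are blocked even though neither is objectionable: one is medical information, the other merely contains a blocked string inside a place name. Telling these cases apart takes the kind of judgement the mechanism does not have.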

When designing software and the social architecture of the web, we do need to think about these issues, but we must eschew trying to encode our own, or others', morality into the machine.